An Attention-Based Spatio-Temporal Neural Operator for Evolving Physics

Published in Machine Learning: Science and Technology, 2025

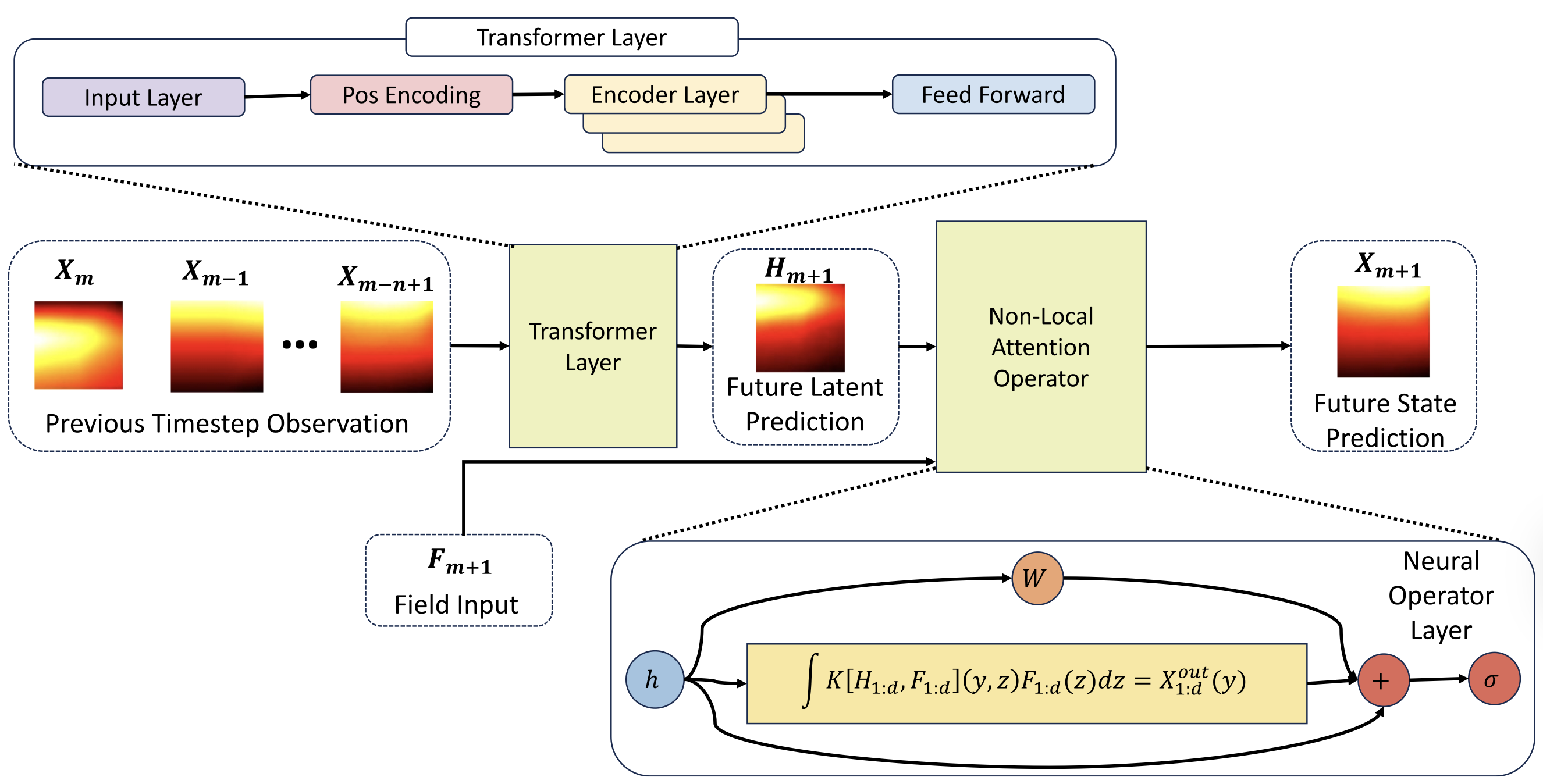

In scientific machine learning (SciML), a key challenge is learning unknown, evolving physical processes and making predictions across spatio-temporal scales. In additive manufacturing, for example, users adjust known machine settings while unknown environmental parameters fluctuate at the same time. Reliable prediction therefore requires a model that both captures long-range spatio-temporal interactions from data and adapts to new, unknown environments. Traditional machine learning models excel at the first task but often lack physical interpretability and struggle to generalize under varying environmental conditions. To address these challenges, we propose the attention-based spatio-temporal neural operator (ASNO), a novel architecture that uses separable attention mechanisms for spatial and temporal interactions and adapts to unseen physical parameters. Inspired by the backward differentiation formula, ASNO combines a transformer for temporal prediction and extrapolation with an attention-based neural operator for handling varying external loads. Separating the contributions of historical states from those of external forces enhances interpretability, enabling the discovery of underlying physical laws and generalization to unseen physical environments. Empirical results on SciML benchmarks demonstrate that ASNO outperforms existing models, establishing its potential for engineering applications, physics discovery, and interpretable machine learning.
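The abstract names the ingredients but not the equations, so the following is only an illustrative sketch, not the authors' implementation. A k-step backward differentiation formula (BDF) for $u' = f(t, u)$ writes the next state as a weighted sum of past states plus a forcing term, $u^{n+1} = \sum_{j=1}^{k} a_j u^{n+1-j} + h\,b\,f(t_{n+1}, u^{n+1})$. The NumPy sketch below assumes a BDF-style split in that spirit: a temporal attention over the last K states produces the history weights, and a spatial attention acting as a discretized integral operator maps an external load field to a forcing term. Every name here (`temporal_weights`, `spatial_operator`, `bdf_style_step`, `params`, `h`, `beta`) is hypothetical, the weights are random rather than trained, and the forcing is evaluated explicitly for simplicity rather than implicitly as in a true BDF.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def temporal_weights(history, Wq, Wk):
    # history: (K, N); row 0 is the most recent state u^n, row K-1 is u^{n-K+1}.
    # The latest state queries all K past states; the softmax scores act as
    # hypothetical stand-ins for the BDF history coefficients a_j.
    q = history[0] @ Wq                              # (d,)
    k = history @ Wk                                 # (K, d)
    return softmax(k @ q / np.sqrt(Wq.shape[1]))     # (K,), sums to 1

def spatial_operator(load, coords, Wq, Wk):
    # Attention as a discretized integral operator: the output at point i is
    # a weighted average of the external load over all N grid points.
    q = coords @ Wq                                  # (N, d)
    k = coords @ Wk                                  # (N, d)
    A = softmax(q @ k.T / np.sqrt(Wq.shape[1]), axis=-1)  # (N, N), rows sum to 1
    return A @ load                                  # (N,)

def bdf_style_step(history, load, coords, params, h=0.01, beta=1.0):
    # u^{n+1} = sum_j alpha_j u^{n+1-j} + h * beta * G[load](x)  (explicit sketch)
    alphas = temporal_weights(history, params["Wq_t"], params["Wk_t"])
    u_hist = alphas @ history                        # (N,) history contribution
    forcing = spatial_operator(load, coords, params["Wq_s"], params["Wk_s"])
    return u_hist + h * beta * forcing, alphas

# Toy setup: K past states on a 1-D grid of N points, random (untrained) weights.
rng = np.random.default_rng(0)
K, N, d, c = 3, 16, 8, 1
params = {
    "Wq_t": rng.normal(size=(N, d)), "Wk_t": rng.normal(size=(N, d)),
    "Wq_s": rng.normal(size=(c, d)), "Wk_s": rng.normal(size=(c, d)),
}
coords = np.linspace(0.0, 1.0, N).reshape(N, c)
history = rng.normal(size=(K, N))
load = np.sin(2 * np.pi * coords[:, 0])
u_next, alphas = bdf_style_step(history, load, coords, params)
print(u_next.shape, alphas.round(3))
```

Keeping the two contributions in separate terms is what makes them inspectable: `alphas` shows how much each past state contributes, while the forcing term isolates the response to the external load. Because softmax weights are nonnegative and sum to one, they preserve constant states as consistent BDF coefficients do, but unlike BDF2 (coefficients 4/3 and -1/3) they cannot take negative values; a learned model would need a different parameterization to recover such schemes.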

Keywords: spatio-temporal neural operator, uncertainty quantification, scientific machine learning, out-of-distribution generalization, interpretability, attention mechanism, PDE modeling

Recommended citation: Karkaria, V., Lee, D., Chen, Y.-P., Yu, Y. & Chen, W., "An Attention-Based Spatio-Temporal Neural Operator for Evolving Physics." IOP Machine Learning: Science and Technology, Nov. 2025; 6, 045036.
